Welcome to Langboat Mengzi Community!

Langboat Technology provides learning guidance for developers who want to get started with NLP technology

Scan code to join Mengzi open source community

Scan code to join Mengzi open source communityIntroductory NLP Fundamentals Course

NLP Advanced Course

Technical Interpretation of Mengzi Model of Langboat Technology

On October 24, Langboat Technology released a lightweight Chinese pre-training language model - the Mengzi model, which consists of 4 models: BERT-style language understanding model, T5-style text generation model, financial analysis model and multiple models. Modal pre-training models are applicable to a variety of common application scenarios. Compared with the existing Chinese pre-training models on the market, the Mengzi model is lightweight and easy to deploy, and its performance surpasses models of the same size or even larger. In addition, the Mengzi model adopts a common interface, complete functions, and covers a wide range of tasks. It can be used not only for conventional language understanding and generation tasks, but also for vertical financial fields and multi-modal scenarios. This article will interpret the research framework and technical principles of the Mengzi model, trying to enable users to better understand and use the Mengzi model, and look forward to participating in the construction of the Mengzi model.

Mengzi Model#

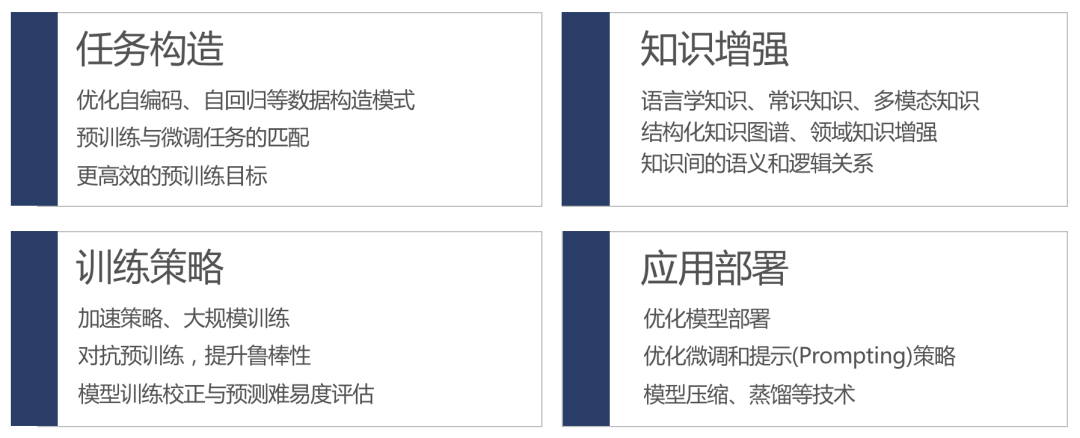

In recent years, large-scale pre-training technologies represented by ELMO and BERT have rapidly promoted the development of natural language processing and become a new paradigm of natural language processing. A series of pre-training strategies, model structure improvement, and training efficiency optimization techniques have been proposed to improve model training efficiency and performance. However, the explosive growth of the amount of model parameters and the amount of data required for training has led to a significant increase in the price of training. In practical task applications, giant models face problems such as inflexible adaptation to downstream tasks and high implementation costs. In addition, existing studies usually focus on English, while there are few related studies on Chinese. For text understanding, text generation, and application requirements in vertical domains and multimodal scenarios, how to build a Chinese model with strong performance under limited time and resource conditions is an important challenge.

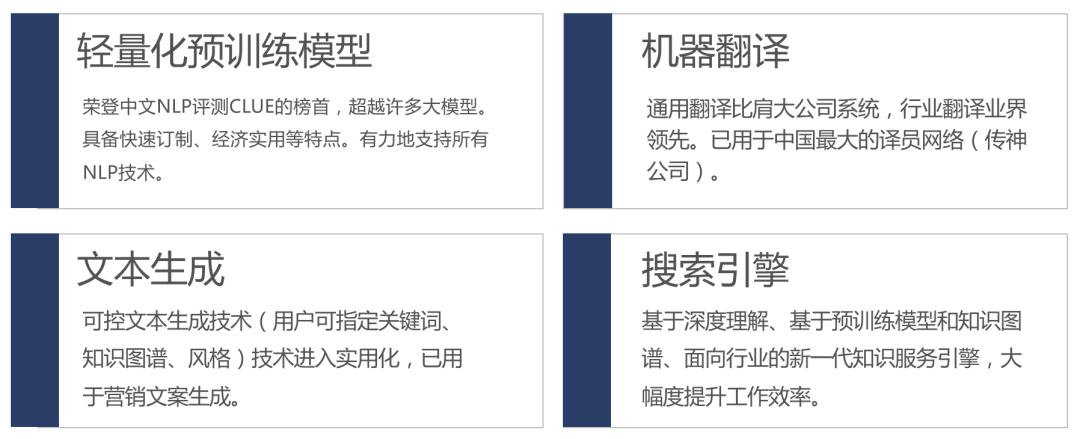

In order to better promote Chinese information processing and promote the application of natural language processing technology in a wider range of practical scenarios, Langboat Technology is committed to developing lightweight pre-training models, as well as advanced machine translation, text generation and industry Search engine, covering common fields such as text understanding (BERT-Style), text generation (T5-Style), financial analysis, multi-modal analysis, etc., and empowering industry customers through open source, SaaS, and customization, which is conducive to fast, Implement real-world business scenarios at low cost.

Next, we will explain the core technologies applied by the four models respectively.

Mengzi language understanding model (Mengzi-BERT-base)#

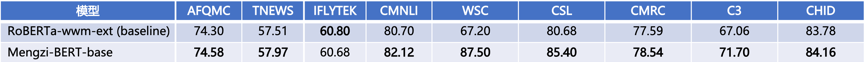

As the core component of language encoding, the Mengzi language model can not only be independently applied to language understanding, but also the cornerstone of the other three models—as the core language encoding module. In order to ensure versatility and facilitate application to downstream systems, the Mengzi model maintains the same model structure as BERT, focusing on the improvement of pre-training objectives and training strategies, and researching more refined, effective, and robust pre-training models[1] . Compared with other Chinese pre-trained models, the Mengzi model uses a segmenter optimized for Chinese, which can contain more Chinese vocabulary, process longer text, use less video memory, and have faster inference speed. In terms of data, high-quality, written-style corpus is selected for training, which can be used for tasks such as text classification, entity recognition, relationship extraction, and reading comprehension. In the CLUE evaluation, the Mengzi language understanding model has shown obvious advantages over the open source RoBERTa model.

-

- The overall research framework of the Mengzi language understanding model not only integrates widely proven effective target strategies, but also includes innovative pre-training techniques. The following mainly introduces three representative strategies: Explicit knowledge enhancement: The core of language modeling is to automatically capture knowledge from large-scale data. Knowledge is divided into explicit knowledge and tacit knowledge [2]. Explicit knowledge is a program or universal principle that can be expressed in words and numbers, easily communicated and shared in the form of hard data, and edited. Tacit knowledge is highly personal and difficult to format knowledge, including subjective understanding, intuition and hunches. Traditional NLP research often uses language annotation information as explicit knowledge to enhance language representation [3], and then better mine implicit knowledge in the hidden layer of the model, such as integrating part-of-speech tagging (POS) into word embedding representation (Word Embedding) and named entities (NER). In the era of pre-training models, existing studies have shown that pre-training models are still underfitting [4][5], and effectively introducing human prior knowledge or common sense can help improve the language understanding and reasoning capabilities of the model[6][7][8]. Inspired by multi-task linguistics enhancement research [9], the tagging tasks of POS and NER are used as auxiliary tasks of language modeling for joint training. Specifically, we use SpaCy to perform part-of-speech tagging and named entity recognition on the input text, and use the recognized target labels as prediction targets for training. Finally, the original loss based on mask modeling (MLM) and the prediction loss of POS and NER are added and summed up as the final loss.

-

- Sequence relational goals: Text understanding tasks usually involve inter-sentence or discourse relations. Many studies have explored inter-sentence relational modeling methods such as Next Sentence Prediction (NSP) and Sentence Order Prediction (SOP) [10]. We found that incorporating the training task of SOP helps to further improve the model performance.

-

- Training deviation correction: Our research found that the widely used MLM strategy will replace the original words with special symbols ([MASK]) in a certain proportion of the original text, which will damage the original sentence structure to a certain extent and increase the prediction Difficulty, but does not conform to the real sentence form, so it may cause a bias in modeling the semantics of the original sentence. To deal with such problems, we propose a series of strategies to correct the training gradient, restore the real and legal sentence structure more accurately, and improve the robustness of the model.

Mengzi Financial Model (Mengzi-BERT-base-fin)#

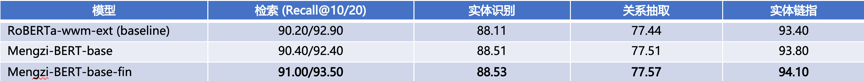

At present, various Chinese open source models are mostly oriented to general fields, and there is a lack of corresponding open source models in vertical fields including finance. In order to meet the corresponding needs, we continue to train on the financial corpus based on the Mengzi language model model and parameters. The corpus covers financial news, announcements and research reports, etc., and can be used for tasks such as financial news classification and sentiment analysis of research reports. In the financial field tasks, compared with the open source RoBERTa benchmark model, the Mengzi financial model has achieved significant performance improvement.

Mengzi text generation model (Mengzi-T5-base)#

ƒ Based on the Encoder-Decoder architecture adopted by T5, in addition to the Mengzi language understanding model as the encoder, a decoder structure of the same scale is added, and the denoising strategy of T5 is used in the training method, which has good training efficiency. Compared with the BERT and GPT models, the Mengzi text generation model can better serve the needs of controllable text generation, and can be applied to copywriting generation, news generation, and research report generation.

Mengzi multimodal model (Mengzi-Oscar-base)#

Due to the scarcity of open source multimodal models suitable for Chinese, we developed the Mengzi multimodal model to meet the practical application needs in the multimodal field. The Mengzi multimodal model adopts the Oscar multimodal architecture and is trained based on image-text alignment data. The language encoding module uses the Mengzi language understanding model and parameters, which can be applied to tasks such as image description and image-text mutual inspection.

Mengzi Open Source Community#

Langboat Technology embraces open source text and looks forward to growing together with the community. The models released for the first time are all alpha versions of the base, and feedback is welcome for imperfections! In addition to the technical details mentioned in this article, there are many cutting-edge technologies that are being studied in depth. After rigorously evaluating the stable version, we will further open source and release the latest results.

After the release of the Mengzi model, we received a lot of feedback. In order to better give back to the community, we have organized FAQs for frequently asked questions on GitHub and will continue to update them. We welcome your continued attention and feedback!

References::

[1] Zhuosheng Zhang, Hanqing Zhang, Keming Chen, Yuhang Guo, Jingyun Hua, Yulong Wang, Ming Zhou. 2021. Mengzi: Towards Lightweight yet Ingenious Pre-trained Models for Chinese.

[2] Michael Polanyi. 1958. Personal Knowledge: Towards a Post-Critical Philosophy.

[3] Xiaodong Liu, Yelong Shen, Kevin Duh, Jianfeng Gao. 2018. Stochastic Answer Networks for Machine Reading Comprehension. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1694–1704.

[4] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, MikeLewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A robustly optimized BERT pre-training approach. arXiv preprint arXiv:1907.11692.

[5] Anna Rogers, Olga Kovaleva, and Anna Rumshisky. 2020. A primer in bertology: What we know about how BERT works. Transactions of the Association for Computational Linguistics, 8:842–866.

[6] Zhuosheng Zhang, Yuwei Wu, Hai Zhao, Zuchao Li, Shuailiang Zhang, Xi Zhou, and Xiang Zhou. 2020. Semantics-aware BERT for language understanding. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, pages 9628–9635.

[7] Siru Ouyang, Zhuosheng Zhang, and Hai Zhao. 2021. Fact-driven Logical Reasoning arXiv preprint arXiv:2105.10334.

[8] Ruize Wang, Duyu Tang, Nan Duan, Zhongyu Wei, Xuanjing Huang, Jianshu ji, Guihong Cao, Daxin Jiang, Ming Zhou. 2021. K-adapter: Infusing knowledge into pre-trained models with adapters. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 1405-1418.

[9] Junru Zhou, Zhuosheng Zhang, Hai Zhao, Shuailiang Zhang.2020. LIMIT-BERT: Linguistics Informed Multi-Task BERT. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings, pp. 4450-4461.

[10] Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, Radu Soricut. 2020. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. In International Conference on Learning Representations.

Products

Business Cooperation Email

Address

Floor 16, Fangzheng International Building, No. 52 Beisihuan West Road, Haidian District, Beijing, China.

© 2023, Langboat Co., Limited. All rights reserved.

Large Model Registration Code:Beijing-MengZiGPT-20231205

Business Cooperation:

bd@langboat.com

Address:

Floor 16, Fangzheng International Building, No. 52 Beisihuan West Road, Haidian District, Beijing, China.

Official Accounts:

© 2023, Langboat Co., Limited. All rights reserved.

Large Model Registration Code:Beijing-MengZiGPT-20231205